Recent Updates:

06-2024 : My work from my 2023 Amazon internship, ““Don’t forget to put the milk back!” Dataset for Enabling Embodied Agents to Detect Anomalous Situations,” was accep...

05-2024 : I am beginning a summer internship with Amazon Lab126! I’m looking forward to working with the team on developing exciting new capabilities for the Astro robot.

11-2023 : My paper, “Can an Embodied Agent Find Your “Cat-shaped Mug”? LLM-Guided Exploration for Zero-Shot Object Navigation,” was accepted at IEEE Robotics and Autom...

05-2023 : I am beginning a summer internship with Amazon Alexa AI!

03-2023 : My paper, “PACE: Data-Driven Virtual Agent Interaction in Dense and Cluttered Environments,” received the best paper honorable mention award at IEEE VR!

12-2022 : My paper, “PACE: Data-Driven Virtual Agent Interaction in Dense and Cluttered Environments,” was accepted at IEEE VR and will be published in a special issue...

08-2022 : My paper, “Placing Human Animations into 3D Scenes by Learning Interaction- and Geometry-Driven Keyframes,” was accepted at IEEE/CVF WACV!

04-2022 : I was selected as a recipient of the National Science Foundation Graduate Research Fellowship Program!

09-2021 : My paper, “Communicating Inferred Goals With Passive Augmented Reality and Active Haptic Feedback,” was accepted at IEEE Robotics and Automation Letters!

05-2021 : I graduated summa cum laude from Virginia Tech with a B.S. in Mechanical Engineering!

Recent Projects:

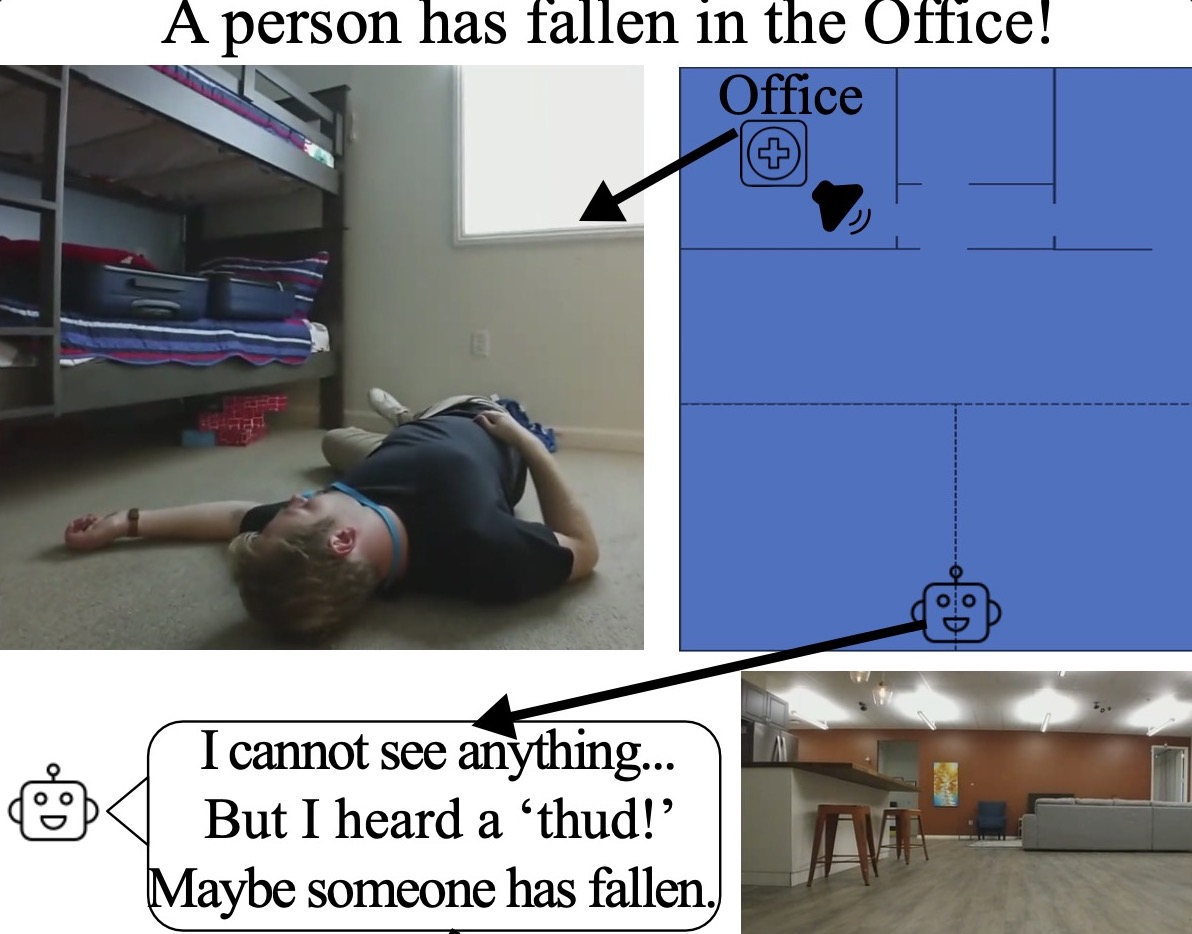

Robots and LLMs for Home Safety

Inspired by the abilities of home robots today, I’ve completed two projects at Amazon aimed at proactively detecting potential dangers in the home and responding to emergencies as they occur. For example, imagine an elderly family member has taken a hard fall: we want to use the robot’s sensing to determine that an emergency is occurring, find the user, assess the situation, and call emergency services if necessary.

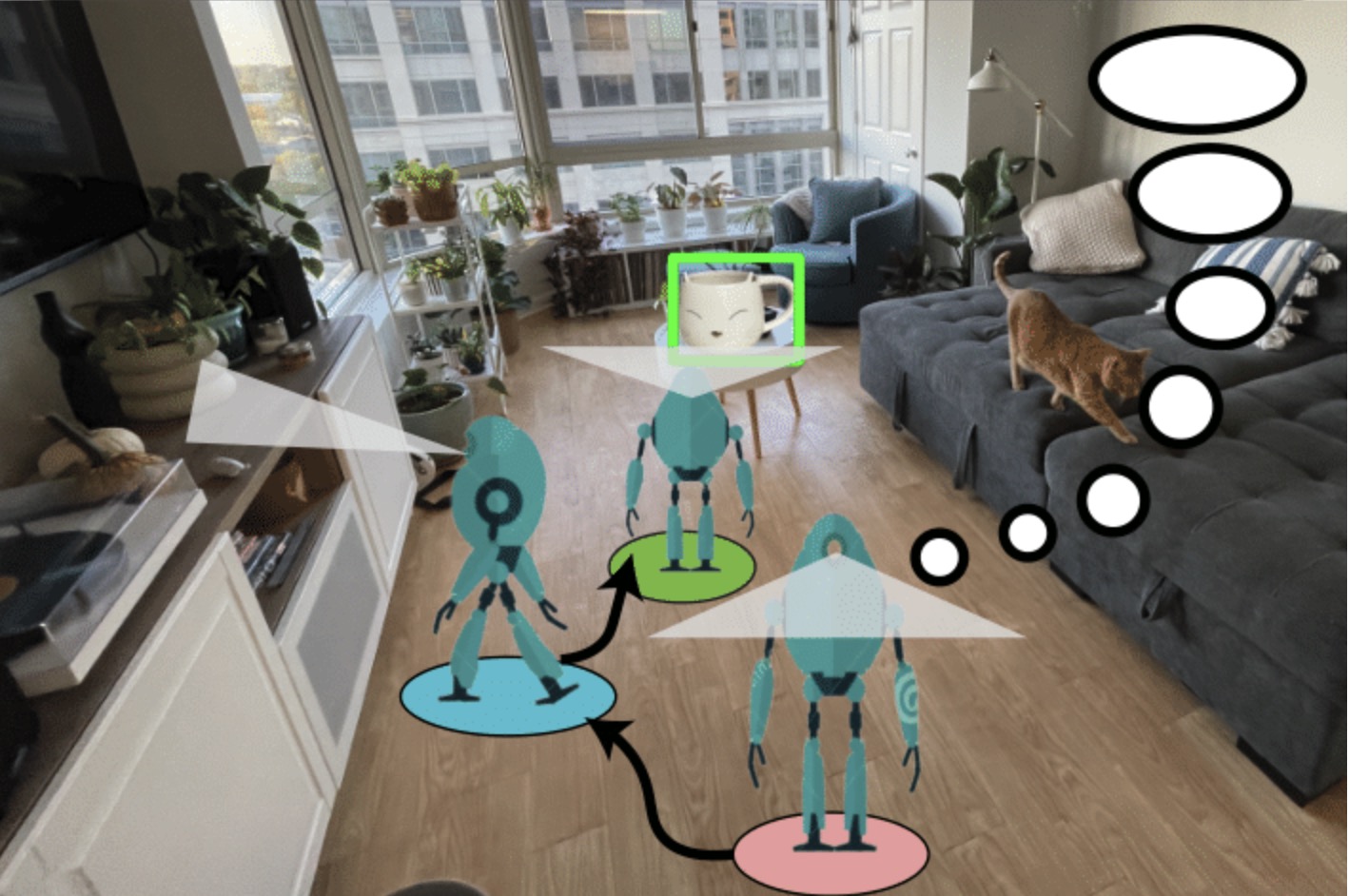

LLM-Based Zero-Shot Object Navigation

We present LGX, a novel algorithm for Object Goal Navigation in a language-driven, zero-shot manner, where an embodied agent navigates to an arbitrarily described target object in a previously unexplored environment. Our approach leverages the capabilities of Large Language Models (LLMs) for making navigational decisions by mapping the LLM’s implicit knowledge about the semantic context of the environment into sequential inputs for robot motion planning.

Placing Virtual Humans into Complex Indoor Environments

Humans interact with their environment through contact. We leverage this insight to better place moving humans into complex, cluttered indoor scenes such that every action in the human’s motion is matched by a suitable object in the scene. For example, a sitting action is placed on a chair.

Multimodal Interfaces for Communicating Robot Learning

Robots learn as they interact with humans, but how do humans know what the robot has learned and when it needs teaching? We leverage information-rich augmented reality to passively visualize what the robot has inferred, and attention-grabbing haptic wristbands to actively prompt and direct the human’s teaching.